Machine Learning for Robotics

The work presented here results from the development of methods and algorithms that provide robots with the ability to learn from humans, a research topic also known as Robot Programming by Demonstration. Currently, my work primarily focuses on:

- Incremental learning of probabilistic models for representing action policies

- Probabilistic control policies that try to satisfy task and environmental constraints

- Compliance control, to allow human users to provide online feedback to robots

- Reinforcement learning

Machine learning algorithms alone are not sufficient to give human users a complete interactive teaching experience. For the experience to be complete, the robot must be capable of "feeling" the user's intentions and of reacting accordingly. Considerable effort therefore has to be devoted to understanding robot control and robot sensing, and to developing teaching interfaces. How can a robot perceive a human's intentions? Which sensory modalities are important? And how should the robot react to human-related sensory inputs and control its actions accordingly?


Machine Learning for Robotics: Applications

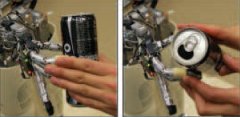

Iterative learning of grasp adaptation

In the context of object interaction and manipulation, one hallmark of a robust grasp is its ability to comply with external perturbations applied to the grasped object while still maintaining the grasp. In this work, we introduce an approach for grasp adaptation that learns a statistical model to adapt the hand posture based on contact signatures produced by a grasped object. The hand controller is then capable of generating motor commands that comply with the grasp variability extracted by the model from the demonstrations.

Kinesthetic teaching of a hand raises two problems: (a) the fingers are only partially compliant, which limits the range of motions that can be taught, and (b) it is difficult to guide every degree of freedom of a passive hand properly and simultaneously. To address both, we use a multi-step learning procedure. An initial hand posture is first demonstrated to the robot and is then physically corrected by a human teacher pressing on the robot's compliant fingers. These correction-replay steps can be repeated until the model's reproduction is satisfactory.

To illustrate this methodology, in addition to this more scientific video, an entertaining compilation of this work with the one on Learning from tactile corrections (see below) can be found here.

Work done in collaboration with Brenna Argall
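As a rough illustration of how a statistical model can map a contact signature to a hand posture, the sketch below fits a single joint Gaussian over (contact reading, joint angle) pairs and conditions it on a new contact reading. This is an assumption made for illustration only: the text above does not specify the model, and the names `fit_joint_gaussian` and `adapt_posture`, the scalar contact reading, and the single joint are all hypothetical simplifications.

```python
from statistics import fmean


def fit_joint_gaussian(contacts, angles):
    """Fit a 2-D Gaussian over paired (contact reading, joint angle) samples.

    Hypothetical simplification: one scalar contact reading and one joint.
    """
    if len(contacts) != len(angles) or len(contacts) < 2:
        raise ValueError("need at least two paired samples")
    n = len(contacts)
    mean_c, mean_a = fmean(contacts), fmean(angles)
    # Unbiased sample variances and covariance.
    var_c = sum((c - mean_c) ** 2 for c in contacts) / (n - 1)
    var_a = sum((a - mean_a) ** 2 for a in angles) / (n - 1)
    cov_ca = sum(
        (c - mean_c) * (a - mean_a) for c, a in zip(contacts, angles)
    ) / (n - 1)
    if var_c == 0.0:
        raise ValueError("contact readings must vary across demonstrations")
    return {
        "mean_c": mean_c,
        "mean_a": mean_a,
        "var_c": var_c,
        "var_a": var_a,
        "cov_ca": cov_ca,
    }


def adapt_posture(model, contact):
    """Condition the Gaussian on a contact reading.

    Returns the expected joint angle and the remaining variance, which can
    be read as how much variability the demonstrations allow at that point.
    """
    gain = model["cov_ca"] / model["var_c"]
    angle = model["mean_a"] + gain * (contact - model["mean_c"])
    # Clamp tiny negative values caused by floating-point round-off.
    variance = max(0.0, model["var_a"] - gain * model["cov_ca"])
    return angle, variance


# Demonstrated pairs (made-up numbers), then a query for a new contact reading.
model = fit_joint_gaussian([0.0, 1.0, 2.0, 3.0], [0.1, 0.3, 0.5, 0.7])
angle, variance = adapt_posture(model, 2.5)
```

In this sketch, a correction-replay step would amount to appending the corrected pairs to the data and refitting. A real hand has many joints and many tactile channels, so the same conditioning would be done with vectors and covariance matrices.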

Learning from tactile corrections

This work presents an approach for policy improvement through tactile corrections for robots endowed with touch sensing capabilities. Our algorithm employs tactile feedback to refine a demonstrated policy, as well as to reuse it for the development of other policies. The algorithm is validated on a humanoid robot performing grasp positioning tasks. The performance of the demonstrated policy is found to improve with tactile corrections. Tactile guidance is also shown to enable the development of policies that successfully execute novel, undemonstrated tasks. We further show that different modalities, namely tele-operation and tactile control, provide useful information about the variability in the target behavior in different areas of the state space.

To illustrate this methodology, in addition to this more scientific video, an entertaining compilation of this work with the one on Iterative learning of grasp adaptation (see above) can be found here.

Work done in collaboration with Brenna Argall

Demonstration learning on the WAM

Work done in collaboration with Sylvain Calinon

Human-Robot collaboration for space applications

Work done in collaboration with Elena Gribovskaya

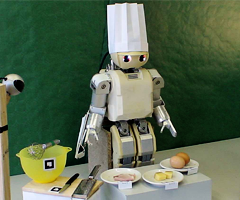

The Chief Cook Robot

This application demonstrates how the Chief Cook Robot learns to cook an omelet by whipping eggs, cutting ham and grating cheese. The robot learns these skills from the guidance of a human teacher, by means of probabilistic learning algorithms. During reproduction of the tasks, the robot can generalize its skills across various situations, e.g., if the objects are moved from trial to trial. Moreover, since the robot was given the ability to observe its environment in real time, the Chief Cook is robust to external perturbations, e.g., if someone moves its tools while it is doing its job.

Soon in all homes!

Presented during one week at the

Work done in collaboration with Sylvain Calinon

Machine Learning for Robotics: Teaching Interfaces

Task-centered active compliance for robotic fingers

Traditional robots are usually driven by a high-gain position controller, which makes it impossible for them to react compliantly to external perturbations such as human feedback. Force and compliance control is therefore being addressed intensively by the robotics research community. Some robotic fingers are cable-driven, which gives them intrinsic compliance, but that compliance is only partial. As a result, it is now possible to teach robots to move their fingers, but likewise only partially.

To cope with this problem, the concept developed here is that, through several rounds of teaching, the robot can progressively learn to be more and more compliant to human feedback while learning to do its task.

Tactile sensing, contact clustering

Recently, part of the robotics research community has started to investigate how robots can be endowed with a sense of touch. Within the EU Roboskin Project, a new prototype of a skin-sensor technology has been developed. Here, current efforts are targeted toward developing methodologies for self-calibration, as well as for reducing the amount of information carried by skin sensor arrays. This video shows the sensory response of this skin and the software used for clustering contact points.
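To give an idea of how clustering can reduce the amount of information carried by a skin sensor array, here is a minimal sketch. It is not the software shown in the video. It assumes each taxel reports a 2-D position and a pressure value, keeps only taxels above a threshold, and groups neighbouring active taxels into contacts summarised by a pressure-weighted centroid. The function name, the tuple layout and both parameters are hypothetical.

```python
from math import dist


def cluster_contacts(taxels, pressure_threshold=0.2, max_gap=1.5):
    """Group active taxels into contact clusters.

    taxels: iterable of (x, y, pressure) tuples (assumed layout).
    pressure_threshold: must be positive, so every cluster has nonzero
        total pressure.
    max_gap: two active taxels closer than this belong to the same contact.
    """
    if pressure_threshold <= 0:
        raise ValueError("pressure_threshold must be positive")
    active = [t for t in taxels if t[2] >= pressure_threshold]
    unvisited = set(range(len(active)))
    clusters = []
    while unvisited:
        seed = min(unvisited)  # deterministic cluster order
        unvisited.remove(seed)
        stack, members = [seed], []
        # Flood fill: grow the cluster through chains of nearby taxels.
        while stack:
            i = stack.pop()
            members.append(i)
            near = [
                j for j in unvisited
                if dist(active[i][:2], active[j][:2]) <= max_gap
            ]
            for j in near:
                unvisited.remove(j)
                stack.append(j)
        total = sum(active[i][2] for i in members)
        cx = sum(active[i][0] * active[i][2] for i in members) / total
        cy = sum(active[i][1] * active[i][2] for i in members) / total
        clusters.append(
            {"centroid": (cx, cy), "total_pressure": total, "size": len(members)}
        )
    return clusters
```

With this kind of summary, a controller only has to process a handful of contact centroids instead of every raw taxel value.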

A low-cost prototype for an artificial skin

As mentioned above, skin technology is part of the future of robotics. However, current technology is still expensive. Here, a low-cost, almost out-of-the-box prototype of skin has been developed. It uses commercially available laptop touch-pads. By touching and sliding their fingers on these touch-pads, a human user can readily control the robot's arm (video). In contrast to tele-operation in joint space (see below), the advantage of this touch-based method is that there is no correspondence problem at all: the teacher directly moves the robot's arm. One may say that the human commands are embodied in the robot's kinematics.
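One plausible way to turn a finger slide on a touch-pad into an arm command is sketched below. It is an illustrative assumption, not the implementation behind the video: the displacement between two successive touch samples is scaled into a planar velocity expressed in the pad's own frame, with a dead zone against jitter and a speed limit for safety. The function name, units and default values are hypothetical.

```python
from math import hypot


def slide_to_velocity(prev_xy, curr_xy, dt,
                      gain=0.5, dead_zone=0.002, max_speed=0.1):
    """Map a finger displacement on the pad to a planar velocity command.

    prev_xy, curr_xy: successive touch positions in metres (assumed units).
    dt: time between the two samples in seconds.
    Returns (vx, vy) in metres per second, expressed in the pad frame.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    dx = curr_xy[0] - prev_xy[0]
    dy = curr_xy[1] - prev_xy[1]
    # Ignore tiny displacements so sensor noise does not move the arm.
    if hypot(dx, dy) < dead_zone:
        return (0.0, 0.0)
    vx, vy = gain * dx / dt, gain * dy / dt
    # Limit the commanded speed while preserving the slide direction.
    speed = hypot(vx, vy)
    if speed > max_speed:
        scale = max_speed / speed
        vx, vy = vx * scale, vy * scale
    return (vx, vy)
```

Because the pad is mounted on the arm, the command can be applied in the frame of the link carrying the pad, which is why no mapping between the teacher's body and the robot's body is needed.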

Robot tele-operation via data-gloves and motion sensors

For humans, a natural way to teach another human to perform movements is to demonstrate the motions oneself. Using a set of commercial motion sensors and data-gloves, an interface has been developed that maps the joint angles of a human arm and fingers to those of the robot. It allows a human teacher to tele-operate a humanoid robot, which shares a kinematic structure similar to that of the human. (video)
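A joint-space mapping of this kind can be sketched as follows, under the assumption that each robot joint follows one measured human joint through a scale and an offset and is then clamped to the robot's joint limits. The `JointMap` fields, the joint names and the limit values are hypothetical and do not describe the actual interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointMap:
    """Correspondence between one human joint and one robot joint."""
    human_joint: str
    robot_joint: str
    scale: float = 1.0    # use -1.0 when the rotation axes are opposed
    offset: float = 0.0   # difference between the two zero postures (rad)
    lower: float = -3.14  # robot joint limits (rad), placeholder values
    upper: float = 3.14


def retarget(human_angles, joint_maps):
    """Convert measured human joint angles into robot joint commands."""
    command = {}
    for m in joint_maps:
        if m.human_joint not in human_angles:
            continue  # sensor dropout: leave this robot joint uncommanded
        raw = m.scale * human_angles[m.human_joint] + m.offset
        command[m.robot_joint] = min(max(raw, m.lower), m.upper)
    return command


# Example with made-up joint names and readings.
maps = [
    JointMap("elbow_flex", "r_elbow", upper=2.0),
    JointMap("wrist_roll", "r_wrist", scale=-1.0),
]
command = retarget({"elbow_flex": 2.5, "wrist_roll": 0.3}, maps)
```

The per-joint scale, offset and limits are where the correspondence problem shows up in practice: the closer the robot's kinematic structure is to the human's, the closer these values stay to the identity mapping.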

Robot tele-operation via a commercial joypad

Another, slightly less natural way to tele-operate a robot is to use a joystick that commands each degree of freedom of the robot. Such an interface has also been developed, and the following video illustrates its capabilities for robot control.

Computer vision

Finally, computer vision is also a key sensory modality for a robot to be able to interact with its environment. Importantly, vision allows robots not only to plan their actions toward environmental goals, but also to react to external perturbations. For instance, suppose that the robot is whipping eggs in a bowl and that the bowl is suddenly moved. Thanks to vision, the robot will be able to adapt its movements toward the new location of the bowl. (video)
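The bowl example can be illustrated with a very small sketch, assuming the end-effector is driven by a first-order attractor toward whatever goal position vision reports at each control step. This is only one simple way to obtain the behaviour described above, not necessarily the controller used on the robot. The function `reach`, the callback `goal_at` and the gain values are hypothetical.

```python
def reach(start, goal_at, steps, gain=2.0, dt=0.01):
    """Move toward a goal that is re-observed at every control step.

    start: initial end-effector position (tuple of floats).
    goal_at: function returning the observed goal position at step k,
        standing in for a vision-based tracker.
    Returns the list of visited positions, including the start.
    """
    position = tuple(start)
    path = [position]
    for k in range(steps):
        goal = goal_at(k)
        # First-order attractor: velocity proportional to remaining error.
        position = tuple(
            p + gain * (g - p) * dt for p, g in zip(position, goal)
        )
        path.append(position)
    return path


def bowl_position(k):
    """Made-up perturbation: the bowl is moved after 200 control steps."""
    return (1.0, 0.0) if k < 200 else (1.0, 1.0)


path = reach((0.0, 0.0), bowl_position, steps=2000)
```

Because the goal is read again at every step, no replanning is needed when the bowl moves: the same update rule simply starts pulling the hand toward the new location.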